ABAP in the cloud

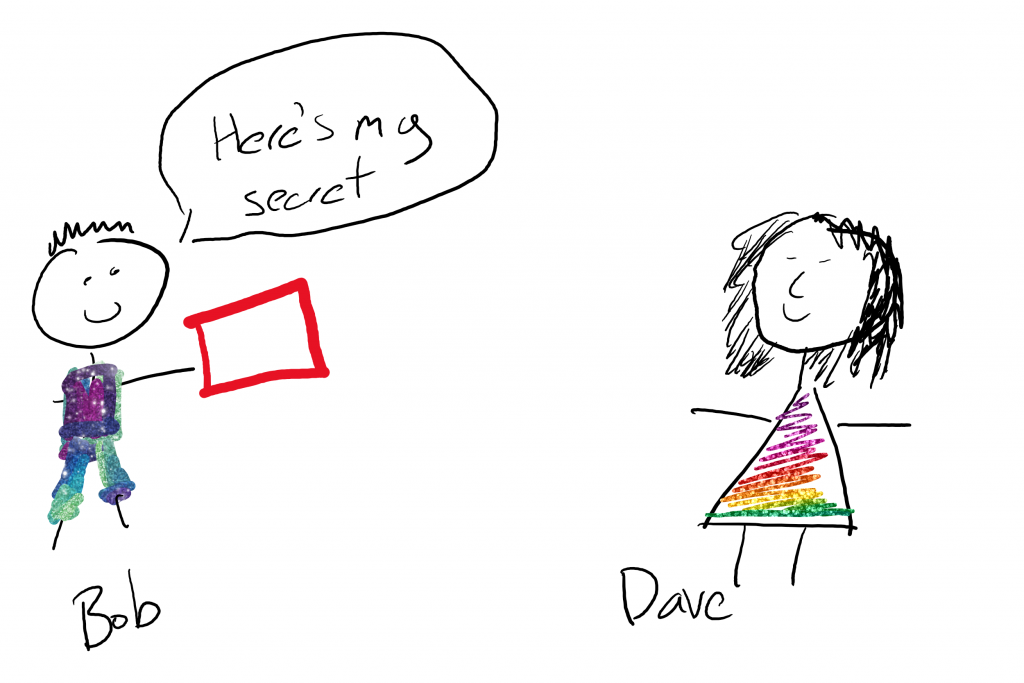

Michael Koch kicked it all off with a tweet,

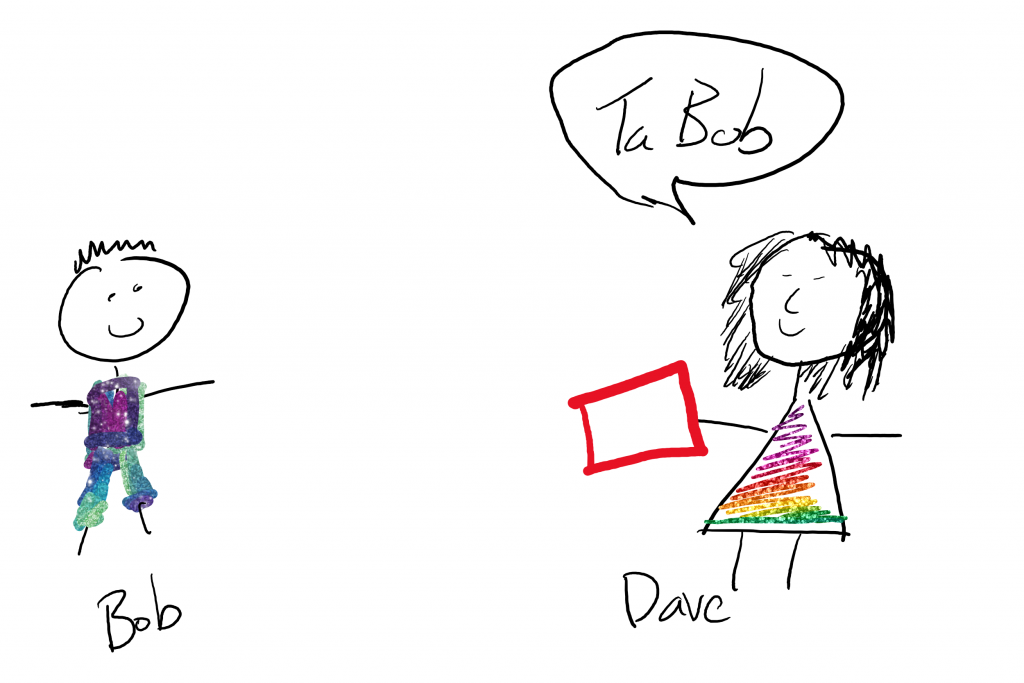

to which of course I had to reply:

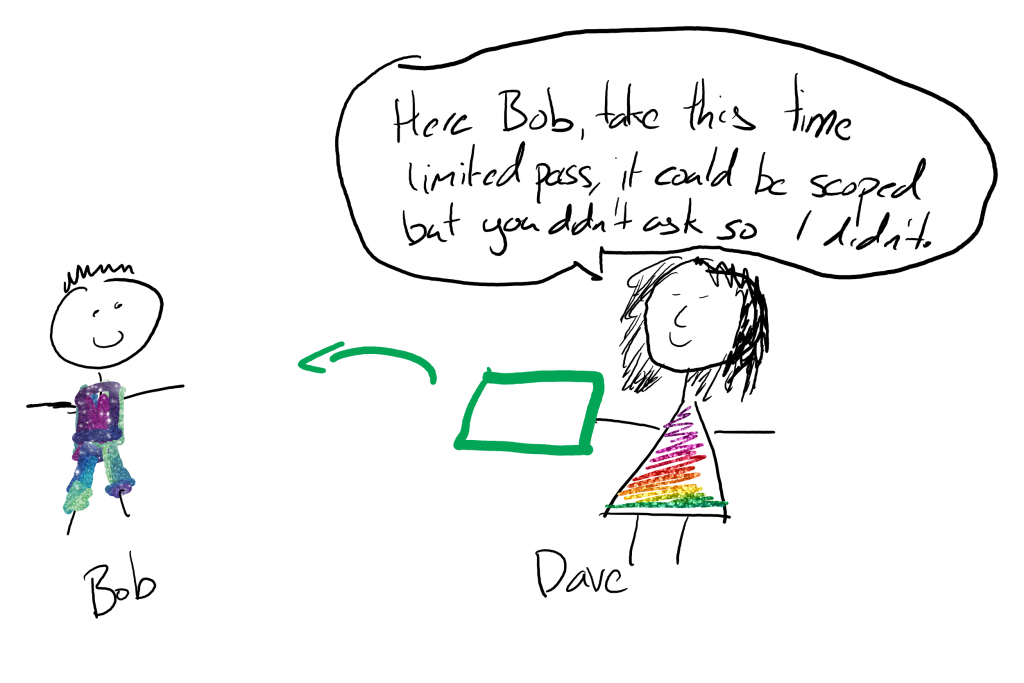

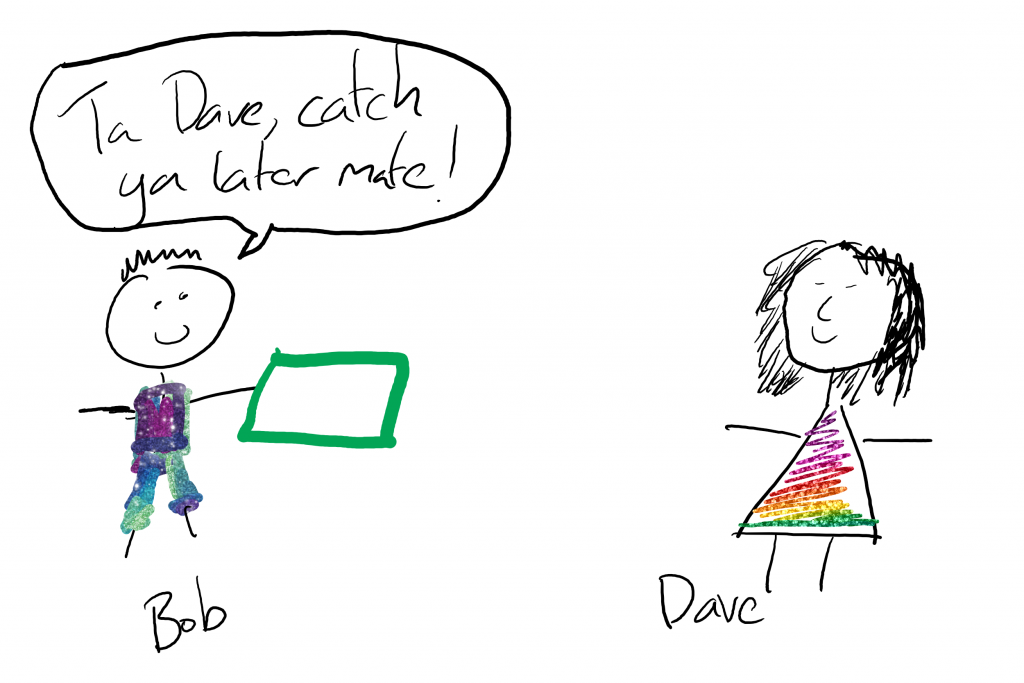

then I was prodded:

and prodded:

and then James beat me to the blog:

and if you haven’t read James’ post, please do, it is excellent.

So whilst I’m waiting to hear how much it’s going to cost me to fix my car, whose engine decided to stop working on the way to work today, I thought that rather than drinking a bottle of Pinot Gris and attempting to forget about the shitty waste of a day I’ve had, I’d do something useful and productive (this post), and drink beetroot, apple, ginger and celery juice instead.

So here are thoughts upon which I will rant.

- ABAP is a proprietary language, which makes its code costly to support.

- Building for cloud is far more than just supporting cloud systems.

- If you love ABAP to the exclusion of everything else, that’s your bed, you lie in it. I like beetroot juice, I am so going to have pink pee later.

- Java is the boring enterprise language of choice.

- A PaaS really should be language agnostic, if not it’s a pretty crappy PaaS.

- Why on earth have we ended up here? Who is paying for this?

- Evolve or die.

These are all going to get intermixed in this rant, but I will still try to address them one by one.

Firstly, on the joys of ABAPers. I have discussed and even written about this, and it may just be the particular markets where I play, but it’s damn hard to find a good and excited ABAPer. People don’t learn the language unless they want to work on SAP products. Imagine how quickly that strips out the fun people. But where people have got good ABAP skills, they tend to have far more than that, including great business process understanding (Robbo has recently written about this: https://blogs.sap.com/2017/10/02/abap-in-sap-cloud-platform-why/). Have a read, especially if you fall into the ABAP diehards camp; it will make you feel much happier than this blog post will.

But because the good ABAP folk have such great depth of business process understanding, they command a reasonable rate. And why not? Having a BA and a coder in one is a bit of a win, is it not? So they are expensive. One hopes that is because they deliver better, but I find this is not true. They just cost more. But you have to have them to support the huge monolith that is your SAP ERP system. So embedded in companies around the world are these folk who can code ABAP, understand their systems and are, if not well paid, expensive to have hanging around.

And you won’t find someone off the street who has just learnt ABAP who is useful, because the skill in ABAP isn’t in the language, it’s in understanding the existing library of standard code and frameworks that you can use to get things done.

FFS the language still doesn’t have the concept of a Boolean!

The requirement for ABAP support is one of the reasons that SAP costs a decent amount to run. In the future, as we move to S/4HANA public cloud (and we will, slowly but inevitably), cost saving will be essential. ABAP costs, so take it out of the equation. Outsource your custom development or, even better, purchase it as SaaS from someone else. Are you a custom software development house? No? Then why do you try to build your own software? Concentrate on dishwasher powder, chocolate bars, beer or whatever it is you have as your core.

If we start building cloud extensions in ABAP we are locking down the list of people who could support them. This will cost us extra. Having worked with SaaS for the last few years, I can clearly state, cost of delivery is far more important now than it ever was on-prem. The expectations of customers are different. They will not pay the same amount to build an extension as they paid for the SaaS solution it enhances. ABAP ain’t cheap, and neither are ABAPers.

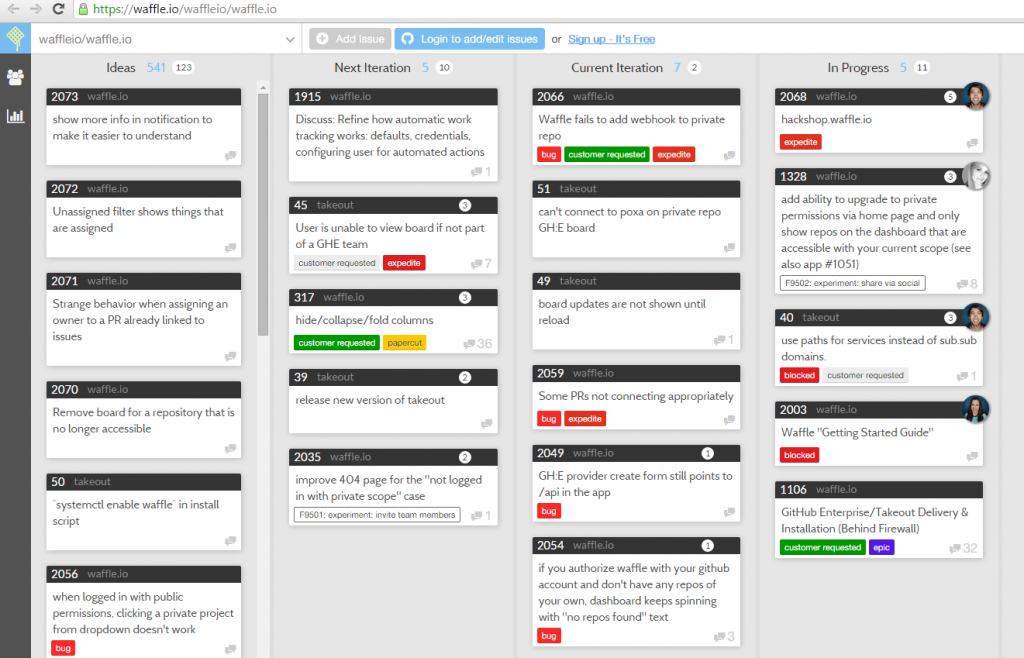

I don’t think ABAP and its whole lifecycle management are really well designed for building cloud apps. James mentioned some great points in his blog around dependency management, and how ABAP doesn’t support non-linear and project based development (hopefully ABAPGit will help here; the official voice of support from SAP is very encouraging). But having spent the last 5 years building cloud apps that integrate with SAP systems, I have been so impressed by the huge amount of standard tooling and functionality that is available for projects outside of SAP. Like, have you used Maven? It’s fricking awesome! Managing the huge number of libraries that I use in most of my builds without this tooling would be unthinkable. Since James was probably more detailed and eloquent on this point I will stop there. But really, even if SAP supports ABAPGit, there is a hell of a long way to go before ABAP could even think of being put into an imaginary cloud development language magic quadrant chart, let alone featuring anywhere but bottom left.

#ABAPisntDead. No, of course it isn’t. There will be legacy on prem apps that will run and people will make businesses out of it, like those Rimini Street folk. But if you can’t see anything out there other than ABAP, my goodness you are short sighted. Any good programmer out there should be able to code in js (server side or browser), and should have a grasp of at least 2 other languages. If you can only deal with one, you’re not a programmer, you’re a liability for the people you work with. Having multiple skills is important, and it’s also important to know when to use them. Enlighten yourselves people, there is a whole world full of cool shite out there, go and have a look. If my post infuriates you because you believe that ABAP is the best thing ever, awesome, both for you and for me: for you because you have passion, so go and use it, and for me because it means I actually got some people who don’t agree with me to read this.

Java is boring, and safe, and commodity. And that is exactly what businesses love. You want something that is reliable, has been proven, does the job. Moreover, you want bucket loads of libraries that other people have built and tested that can do the things you want to do. Whilst I built an implementation of TFA in ABAP that was compatible with Google’s TFA Authenticator app, it was a pain in the arse, and it hasn’t been updated since I wrote it and then worried about releasing it as open source, because you weren’t allowed to do that with ABAP. There’s a standard lib for Java. Standard boring languages are the bedrock of good enterprise builds. I do like to play with server side js (aka Node), but I’m still a sucker for strongly typed languages.
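To give a feel for how little code the TFA case needs when the crypto primitives come with the platform, here is a minimal sketch of a time-based one-time password (TOTP, RFC 6238) generator using only the JDK’s `javax.crypto`. It is not my ABAP implementation ported across. The class name `TotpSketch` and the method `code` are made up for illustration, and it assumes HMAC-SHA1 and a raw byte secret; the Base32 decoding of the secret that authenticator apps expect is left out.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical helper for illustration only, not a hardened implementation.
final class TotpSketch {

    // Returns the TOTP code for the given raw secret and Unix time in seconds.
    // Assumption: HMAC-SHA1, as in the RFC 6238 reference test vectors.
    static String code(byte[] secret, long epochSeconds, int stepSeconds, int digits) {
        // The moving factor is the number of whole time steps since the epoch.
        long counter = epochSeconds / stepSeconds;
        // ByteBuffer is big-endian by default, which is what HOTP requires.
        byte[] message = ByteBuffer.allocate(Long.BYTES).putLong(counter).array();

        byte[] hash;
        try {
            Mac mac = Mac.getInstance("HmacSHA1");
            mac.init(new SecretKeySpec(secret, "HmacSHA1"));
            hash = mac.doFinal(message);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA1 not available", e);
        }

        // Dynamic truncation (RFC 4226): the low nibble of the last byte
        // picks a 4-byte window; the top bit of that window is masked off.
        int offset = hash[hash.length - 1] & 0x0f;
        int binary = ((hash[offset] & 0x7f) << 24)
                | ((hash[offset + 1] & 0xff) << 16)
                | ((hash[offset + 2] & 0xff) << 8)
                | (hash[offset + 3] & 0xff);

        // Keep the last `digits` decimal digits, left-padded with zeros.
        int modulus = 1;
        for (int i = 0; i < digits; i++) {
            modulus *= 10;
        }
        return String.format("%0" + digits + "d", binary % modulus);
    }

    public static void main(String[] args) {
        // Shared secret taken from the RFC 6238 test vectors (ASCII bytes).
        byte[] secret = "12345678901234567890".getBytes(StandardCharsets.US_ASCII);
        long now = System.currentTimeMillis() / 1000L;
        System.out.println(code(secret, now, 30, 6));
    }
}
```

A 30 second step and 6 digits are the values authenticator apps commonly default to; treat both as parameters to check against whatever you are integrating with rather than as givens.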

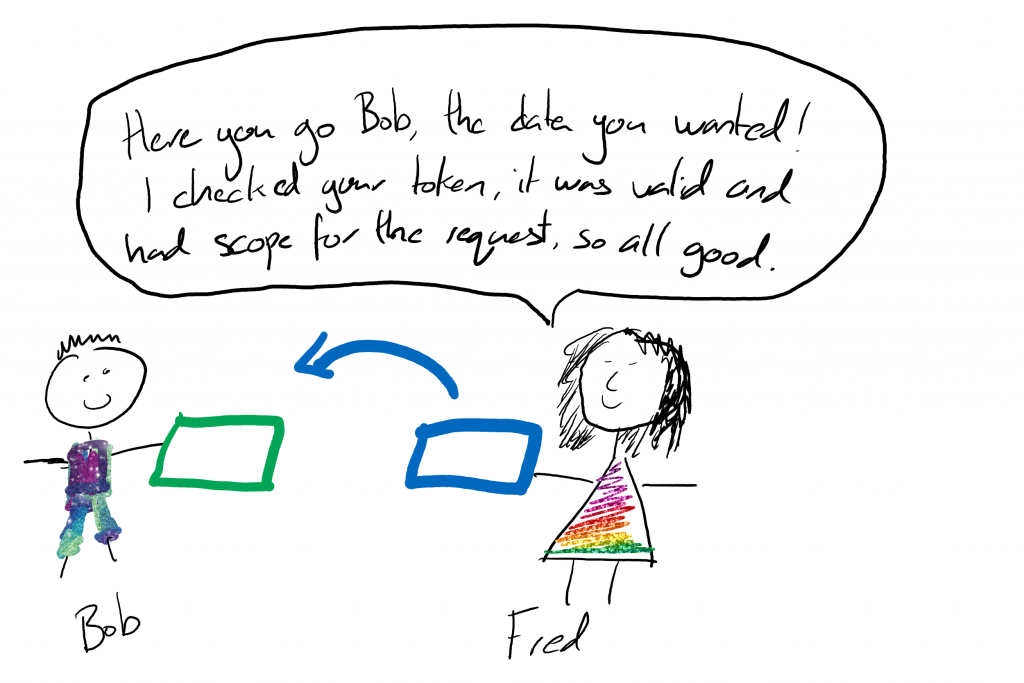

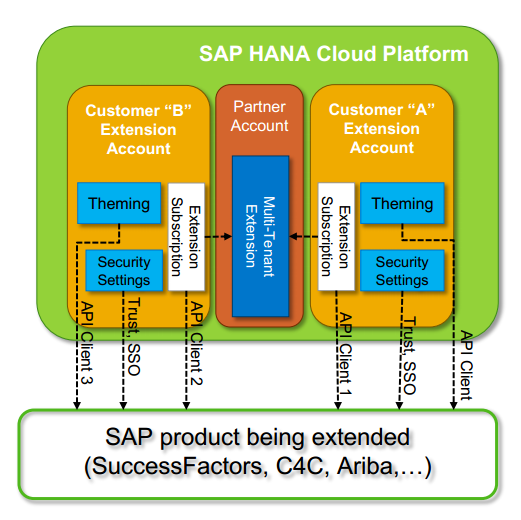

But if you don’t like Java, then awesome, choose something else. Indeed it should not matter what you choose, because any PaaS you build on should be language agnostic when it comes to providing services to you to consume. If you’re not consuming any services from your PaaS then you missed the memo about cloud development, please go back to your application server. A PaaS offers micro-services that should be able to be consumed by any application running on that platform. This inherently makes those services consumable in a fashion that is hard to use for ABAP and pretty standard for every other language. I’m sure that SAP could wrap their services into a consumable layer that would be easier to use in the Cloud based ABAP. But this then means we start losing one of the best bits of the PaaS, that it shouldn’t favour any runtime. We’ll see how this story plays out…

Which kinda segues into my next worry/rant/observation. How did we get to a place where a language that really isn’t suited to cloud extension ends up as an officially supported runtime in SAP’s CF PaaS? This goes back to my original tweet.

I believe that it is clearly SAP’s strategy to move to the largest part of their revenue coming from public cloud based SaaS solutions (including ERP). Btw, I think this is a sound strategic vision, because if they don’t pivot to get there, someone else will take that space. The on-prem model will not make as much money in the future. Today’s small companies are tomorrow’s giants, and with SaaS solutions they don’t need to migrate/upscale; they will keep the solution they buy today. SAP needs to be in that space, and they need the credibility that comes from large customers being there too.

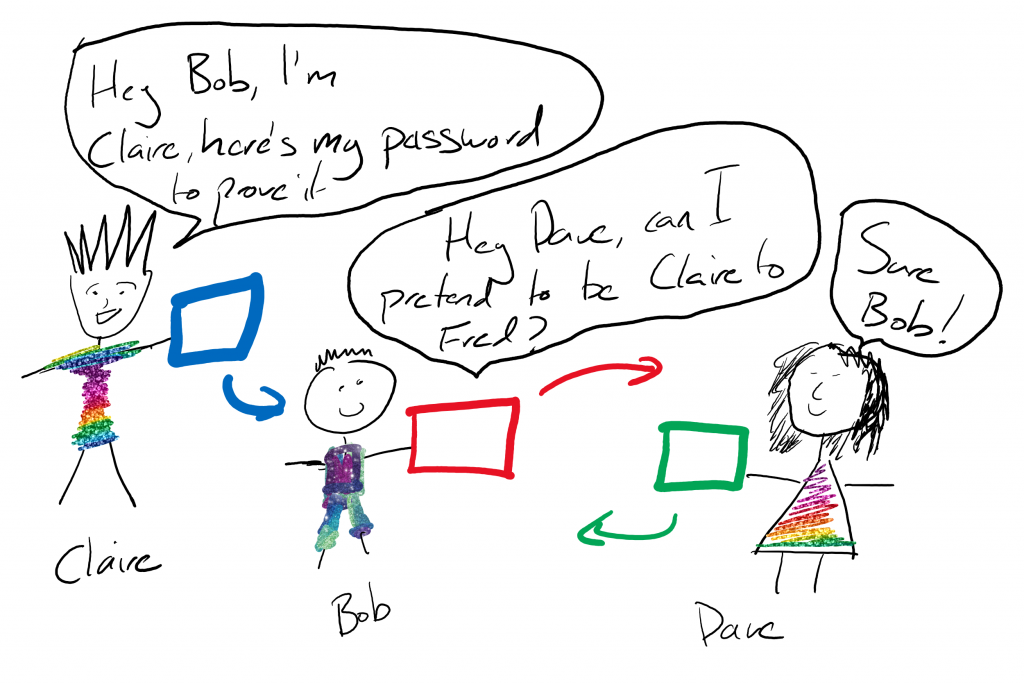

To this end I envisage SAP have been discussing moving some very influential customers to the public cloud. Those customers, I would guess, have responded that they don’t want to lose their current people or custom build investments.

The obvious solution from SAP is to put together an ABAP cloud runtime. It cannot be cheap to do this though. The effort to make ABAP into a secure and lightweight containerizable solution will not be something that a team will do in a week or two. There must be some sound and solid business reasons to do this. For all the reasons I have previously mentioned, I believe that if companies want to extend SAP SaaS solutions, they should think about using other languages, not ABAP. But I fear this is not about making a better solution, it is about making a marketable one. If customers believe that they can extend the value of their existing investments and also benefit from moving to a SaaS based solution, that is a great sales pitch. It’s having your cake and eating it.

This vision (even if it doesn’t work out to be the reality) of a simple gateway to moving to SaaS ERP is what I believe we are now being sold. This isn’t a story for developers, this is a story for the high level execs that sign the S/4HANA subscriptions.

I hope that a cloud based ABAP will be the gateway that enables some organisations to get off the on-premise model and head to the cloud. What I fully expect is that once they are there, they will realise that there are better and more supportable ways to extend. That would be great. In the meantime I fear that we will start bringing non-cloudy ways of working into the cloud landscape, which will likely cause failed or cost-overrun projects. We run the risk of preferring Cloud ABAP as a way to interact with S/4HANA cloud, and that would be disastrous.

It has been suggested that Cloud ABAP will potentially be the solution that encourages adoption of the SAP Cloud Platform. I just hope it isn’t the solution that kills it. I would much rather the money being spent on putting ABAP into the cloud was used to handle some of the other issues I see with SAP CP, but clearly there is a view that there will be a return on the investment.

Then again, if you’re not trying new stuff and making mistakes, you’re not learning. If you’re not learning, you’re falling behind. So here’s to making mistakes and learning! To steal the excellent closing lines from James’ post:

So buckle up because there’s no turning back at this point. It’s either evolve or die.

I look forward to a lively debate on this topic.

(James Wood – https://blogs.sap.com/2017/10/04/abap-in-the-cloud-is-this-a-good-thing/)

James, I couldn’t say it better mate. Although I would refer to the platform as SAP CP 😉

I think SAP Cloud Platform is and will be a key part of the story of SAP’s and customers’ evolution to the cloud. If it takes putting a “runs ABAP” badge on it to get people to see how useful it is, I’ll deal with it. But for sure, I would not recommend it to any organisation as best practice. I’ll keep an open mind, perhaps it will be one day, and if so I’ll adapt and evolve, because that’s what you should do.

As always, my own thoughts, not my company’s, please feel free to jump onto SCN and reply to James’ post. I’ll probably read those comments as well as whatever gets posted on twitter.